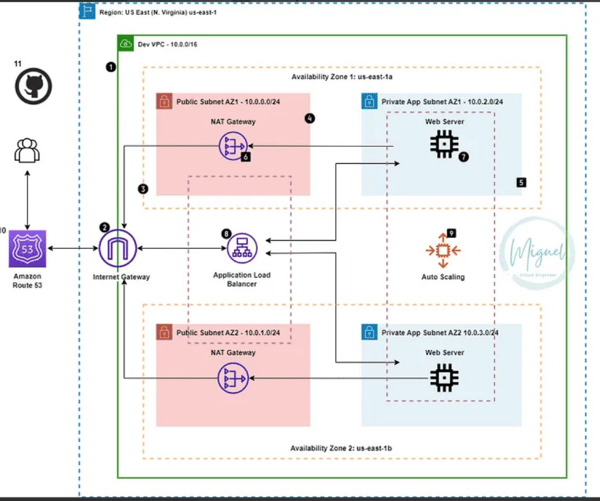

Deployed a highly available HTML website

01

In this project I deployed:

· VPC.

· Public and private subnets in 2 availability zones.

· IGW to allow public access through the internet.

· Route tables, associated with the relevant subnets.

· NAT Gateway to allow instances in private subnets to access the internet for patching and updates.

· Security Groups to control access to Webservers.

· ALB in the public subnets to distribute traffic to Webservers in the private subnets.

· EC2 for Webserver application.

· A new domain name, registered in Route 53.

· Record set in Route 53 to point the domain name to the ALB.

· An SSL certificate, requested in AWS Certificate Manager.

· HTTPS (SSL) listener for the ALB.

· An Auto Scaling Group, which contains the EC2 instance configuration, created after terminating the original EC2 instance.
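
The subnet layout in the list above can be sketched in a few lines of Python. This is only an illustration: the VPC CIDR `10.0.0.0/16`, the `/24` subnet size and the availability zone names are assumptions, not the values used in the project.

```python
import ipaddress


def plan_subnets(vpc_cidr, azs, new_prefix=24):
    """Carve one public and one private subnet per availability zone out of a VPC CIDR."""
    vpc = ipaddress.ip_network(vpc_cidr)
    # subnets() yields equal-sized, non-overlapping blocks in address order.
    blocks = vpc.subnets(new_prefix=new_prefix)
    plan = []
    for tier in ("public", "private"):
        for az in azs:
            plan.append({"tier": tier, "az": az, "cidr": str(next(blocks))})
    return plan


if __name__ == "__main__":
    # Hypothetical CIDR and AZ names, chosen only for the example.
    for subnet in plan_subnets("10.0.0.0/16", ["eu-west-2a", "eu-west-2b"]):
        print(subnet)
```

With two availability zones this gives four subnets: the ALB would sit in the two public ones and the Webservers in the two private ones.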

02

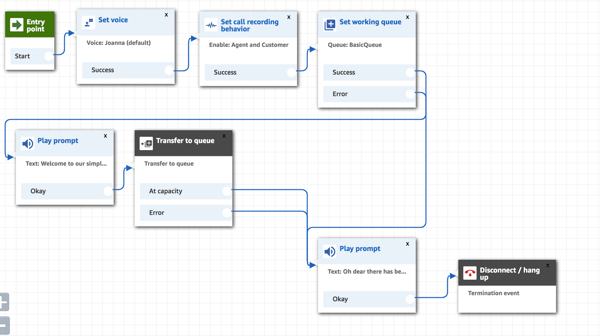

Amazon Connect call centre connected to Amazon Lex

Initially I set up a personal banker chatbot for customers to check their bank balance. The chatbot asks for the customer's PIN, and a Lambda function written in JavaScript handles the request. By adding intents and slot types, the chatbot can reliably interact with the customer and give them several options.

I then set up a contact centre on Amazon Connect with a simple workflow, in which an automated message advises the customer to hold while they are connected to an agent. After integrating the chatbot and making a few changes to the workflow, the customer got the option of either using the chatbot (to check their balance, for example) or speaking to an agent.

After a few more steps, the interactions were customised to each caller, giving them a personal experience. This was a fun project and I gained valuable insight into Amazon Lex and Amazon Connect.
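
A fulfilment function of this kind might look roughly like the sketch below. The project used JavaScript; this version is in Python purely for illustration and assumes the Lex V2 event and response shape. The slot names `pin` and `accountType` and the `ACCOUNTS` data are hypothetical.

```python
# Hypothetical data: PIN -> balances. A real bot would query a backend instead.
ACCOUNTS = {"1234": {"current": 250.00, "savings": 1200.50}}


def lambda_handler(event, context):
    """Read the filled slots from a Lex V2 event and close the intent with a balance."""
    intent = event["sessionState"]["intent"]
    slots = intent["slots"]
    # Assumed slot names; the real bot may name them differently.
    pin = slots["pin"]["value"]["interpretedValue"]
    account_type = slots["accountType"]["value"]["interpretedValue"].lower()

    balances = ACCOUNTS.get(pin)
    if balances is None or account_type not in balances:
        state, text = "Failed", "Sorry, I could not find that account."
    else:
        state = "Fulfilled"
        text = f"Your {account_type} balance is {balances[account_type]:.2f} GBP."

    return {
        "sessionState": {
            "dialogAction": {"type": "Close"},
            "intent": {"name": intent["name"], "state": state},
        },
        "messages": [{"contentType": "PlainText", "content": text}],
    }
```

The intent decides which request the customer is making, and the slot values carry the details (here the PIN and the account type) that the function needs to answer it.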

03

https://drive.google.com/file/d/1RIZt2kz0m7baeR-WyRbbSkRi7BJ73PY_/view

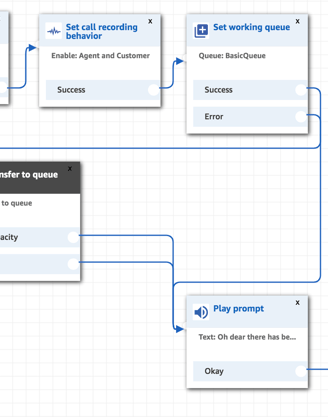

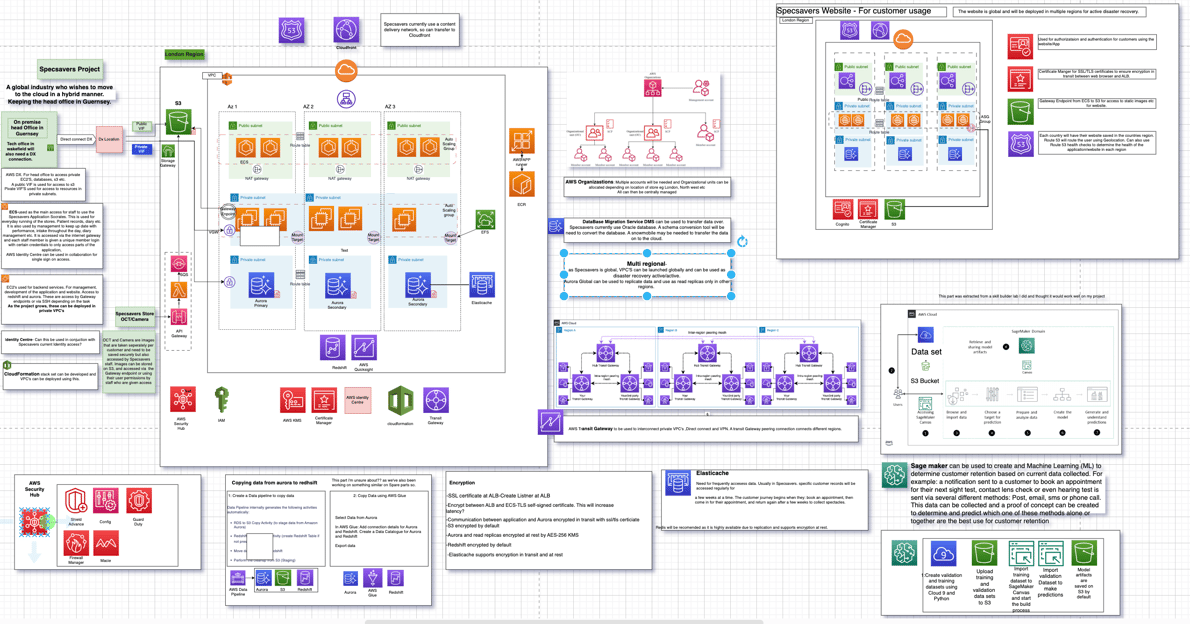

Specsavers Project

This is an ongoing project. I previously worked for Specsavers, and after studying the AWS cloud I now understand how the infrastructure works and integrates. However, despite extensive research, I could not find specific details about the Specsavers infrastructure, so I decided to design my own concept.

I initially deployed a VPC for in-store staff to log in and use the Specsavers Socrates system to book customer appointments, update records, take payments and carry out general managerial admin. These systems would run on ECS in front of Aurora. Aurora would have replicas in different Regions for disaster recovery purposes. Each county (or 'area', as Specsavers calls them) would have a separate VPC. These VPCs would also have an API Gateway through which the store cameras could transfer photographs of the retina of the eye to an S3 bucket.

There are two main offices at Specsavers: head office and the technical office. These offices would need a Direct Connect connection and their own respective VPCs.

This would have to be incorporated into AWS Organizations. Head Office would have access to the management account, and there would be separate Organisational Units for the tech team, finance, store access, Head Office admin, the website and so on.

Security Hub can be used to bring together Amazon GuardDuty, Macie, Inspector and third-party security tools in order to centralise security. All accounts can be controlled centrally, and the AWS environment can be assessed against security best practice.

Data can be transferred from Aurora to Redshift for analytics. This would be used for business intelligence (it could vary from the number of eye tests conducted throughout the day to stock replenishment). Real-time analytics using Kinesis will also be needed, for metrics such as live conversion rates and store intake.

IAM and AWS IAM Identity Center would be used for staff login and access.

A website for customers to make appointments and order contact lenses, and for marketing purposes, will be set up in each of the 'area' VPCs. Amazon Cognito can be used as an identity provider, or to federate with one, for customers to log in. Each time a customer books an appointment, an SNS topic sends a notification to the relevant store and a Lambda function updates the database so that the appointment shows up on the relevant store's system.

SageMaker is used for machine learning, to help predict an efficient approach to customer retention.

This is an ongoing project which I update as my knowledge and expertise grow.
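
As a rough illustration of the booking flow, an SNS-triggered Lambda function could look like the sketch below. The message fields (`store_id`, `customer`, `slot`) are hypothetical, and an in-memory dictionary stands in for the real database, so this shows only the shape of the idea, not the actual design.

```python
import json

# Stand-in for the store database (hypothetical); keyed by store ID.
APPOINTMENTS = {}


def lambda_handler(event, context):
    """Record each booking delivered by SNS against the relevant store."""
    saved = 0
    for record in event["Records"]:
        # SNS delivers the published message as a string under Sns.Message.
        booking = json.loads(record["Sns"]["Message"])
        APPOINTMENTS.setdefault(booking["store_id"], []).append(
            {"customer": booking["customer"], "slot": booking["slot"]}
        )
        saved += 1
    return {"saved": saved}
```

In the real concept the write would go to Aurora, so that the appointment appears on the store's system.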

04

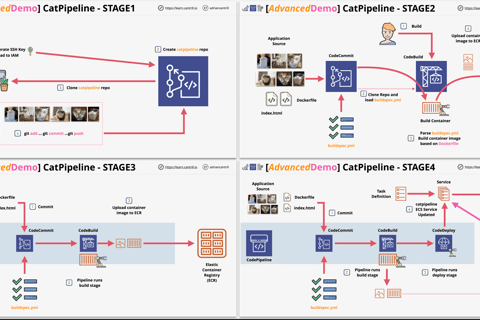

CI/CD Project

In this project I implemented a full code pipeline incorporating CodeCommit, CodeBuild and a deploy stage. Other services used: CloudFront, EC2, ECS, IAM, Security Groups and ALB.

The outcome is a full code pipeline which automatically builds and deploys a new container each time new code is committed to the CodeCommit repository.

STAGE 1 : Configure security, create a CodeCommit repository and clone the repository to the local machine.

STAGE 2 : Configure CodeBuild to clone the repository and, with CloudFormation, build a Docker container image and store it in ECR.

STAGE 3 : Configure a CodePipeline with commit and build steps to automate the build on each commit.

STAGE 4 : Create an ECS cluster, security groups and an ALB, and configure the code pipeline for deployment to ECS Fargate.
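
One detail that links the build and deploy stages: the standard Amazon ECS deploy action in CodePipeline reads an `imagedefinitions.json` file naming the container and the image to run. The sketch below shows how that file's content could be produced. Whether this project wired its deploy stage this way is an assumption, and the account ID, region, repository and container names are placeholders.

```python
import json


def ecr_image_uri(account_id, region, repository, tag):
    """Build the URI of an image stored in a private ECR repository."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def image_definitions(container_name, image_uri):
    """Return the JSON content of imagedefinitions.json for one container."""
    return json.dumps([{"name": container_name, "imageUri": image_uri}])


if __name__ == "__main__":
    # Placeholder values, not those of the real pipeline.
    uri = ecr_image_uri("111122223333", "eu-west-2", "webapp", "latest")
    print(image_definitions("webapp", uri))
```

The build step would write this content to a file and publish it as a build artifact, which the deploy stage then uses to update the ECS service.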

05

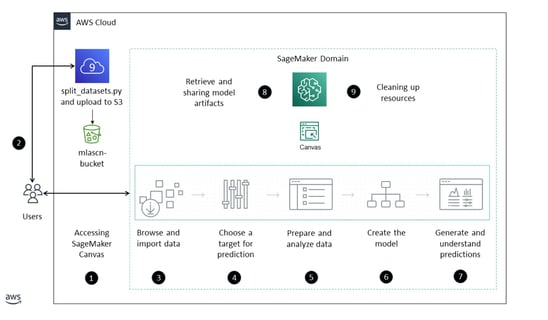

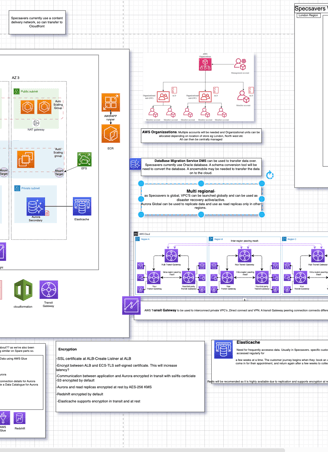

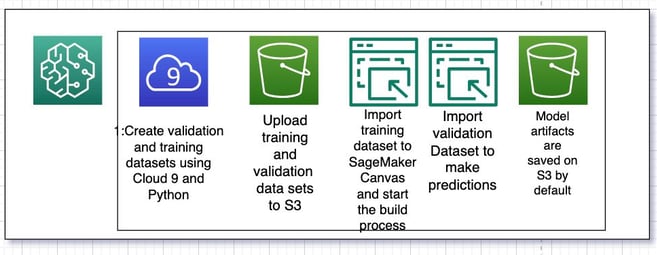

Amazon SageMaker

I used SageMaker Canvas to create an ML model that predicts customer retention based on an email campaign for new products and services.

· First, AWS Cloud9 is used to create the training and validation datasets, and the files are then uploaded to S3.

· The training dataset files are then imported into SageMaker Canvas to start the build process and train the model.

· The validation dataset is then used to make predictions.

· Canvas then uploads all artifacts to a default S3 bucket.
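
The first step, creating training and validation datasets, could be done with a short script like the sketch below. The 80/20 split, the fixed seed and the column names in the usage note are assumptions for illustration; the lab's actual script may differ.

```python
import csv
import io
import random


def split_rows(rows, validation_fraction=0.2, seed=42):
    """Shuffle the rows and split them into training and validation sets."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)  # seeded so the split is repeatable
    cut = len(rows) - round(len(rows) * validation_fraction)
    return rows[:cut], rows[cut:]


def to_csv(header, rows):
    """Serialise a header and rows as CSV text, ready to upload to S3."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(header)
    writer.writerows(rows)
    return buffer.getvalue()
```

For example, `train, validation = split_rows(rows)` followed by `to_csv(["customer_id", "opened_email", "retained"], train)` would produce the training file, with hypothetical column names.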

AWS Skill Builder lab